The Great Decoupling: Why AI Infrastructure Is Still Stuck in 2015

We're building AI hardware like it's still 2015. And it's costing us billions.

I've spent 25+ years in AI/ML—I was the first to put an NVIDIA GPU into a self-driving car, ran AI workloads on Yahoo's Hadoop clusters, spearheaded AI for a trillion-dollar investment firm, served as head of AI at a datacenter company, applied AI across dozens of startups, and co-founded a health tech company that Apple acquired. I've watched the AI hardware market evolve from scrappy GPU clusters to hundred-thousand-chip supercomputers.

And I've watched companies make the same expensive mistake over and over: treating training and inference like they're the same workload.

They're not. They haven't been for years.

Every dollar wasted on this assumption comes back to the same problem: we're still using general-purpose AI accelerators for both training and inference when specialized hardware would be far more cost-effective.

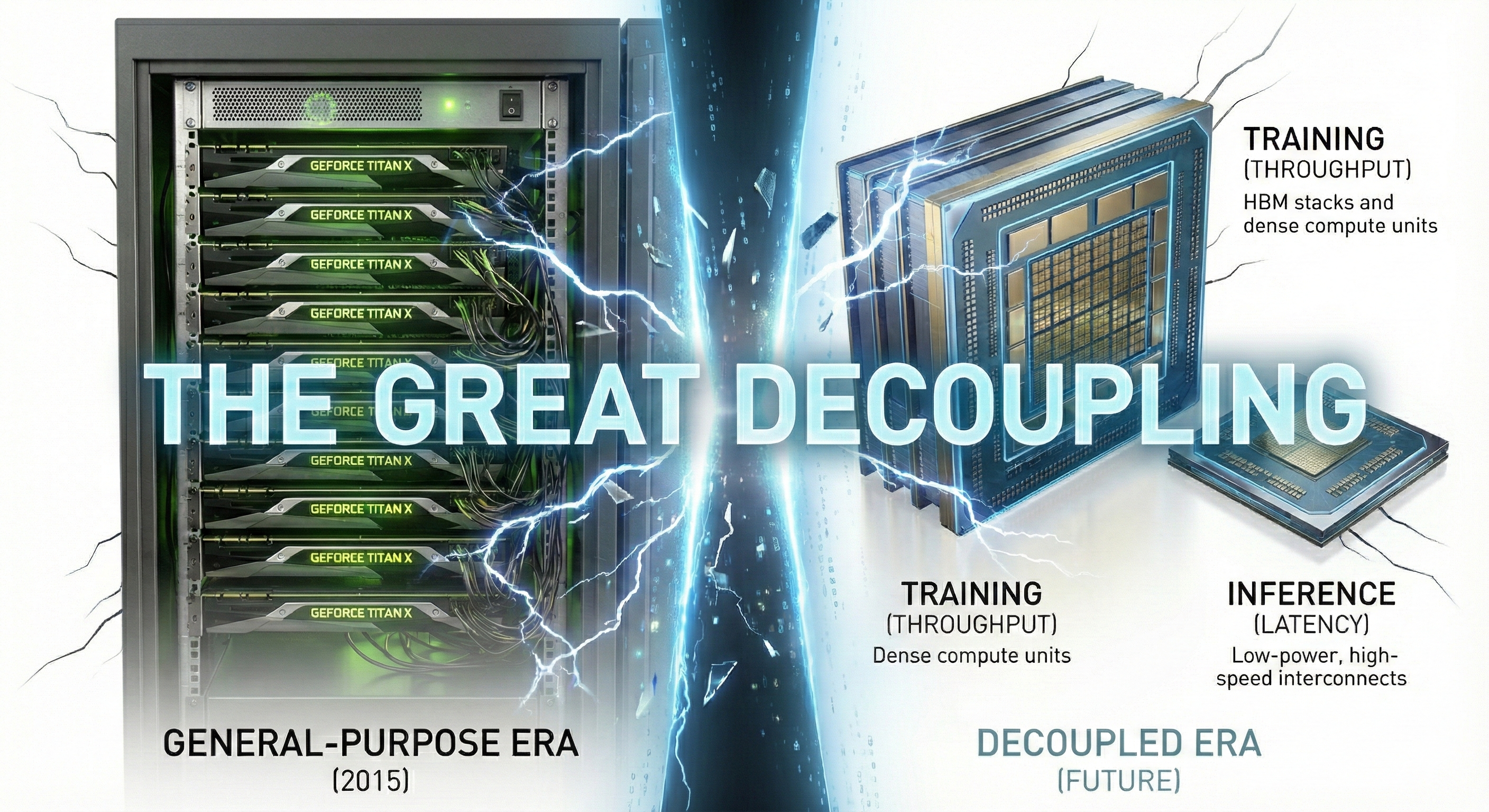

The World Changed. The Hardware Didn't.

In 2015, this made sense. Small teams trained models on Tuesday and served them on Wednesday. A single K80 cluster handled your entire AI lifecycle. "Scale" meant thousands of requests per second, not billions.

That world is gone.

Training centralized brutally (for frontier foundation models):

- Clusters of 10,000-100,000 GPUs running for months

- $100M-$1B per training run

- A handful of companies globally that can afford it

- Models trained once every 6-18 months

- Thousands of engineers supporting each run

Inference exploded everywhere:

- Billions of requests daily

- Sub-100ms latency requirements

- Running on everything from data center clusters to iPhones

- Dominated by power budgets (measured in milliwatts on edge devices, single-digit watts on phones)

- Cost-per-token has become a critical bottleneck for LLM-centric AI businesses

Yet we're still largely using the same hardware architecture for both. Yes, Blackwell has better inference efficiency than its predecessors, but it's still fundamentally designed as a general-purpose architecture. Same CUDA stack. Same frameworks. Same core compromise.

This is like using a freight train for both cross-country shipping and your daily commute. Technically possible. Economically insane.

The Real Cost

The numbers are staggering, and I've seen them firsthand across multiple client engagements.

On the training side: Shaving 20% off a training run by using hardware that's 30% worse at inference but 40% better at training? On a $500M run, that's $100M saved. One run.

On the inference side: I've worked with startups running inference on A100s—the same GPUs they used for fine-tuning. $47K/month in compute costs. We moved inference to purpose-built hardware. Same latency. Same throughput. $9K/month. That's $456K saved annually for one startup on one workload.
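The arithmetic behind that figure, as a minimal sketch. The function names are illustrative; the dollar amounts are the ones quoted above.

```python
def annual_savings(before_per_month: float, after_per_month: float) -> float:
    """Yearly savings from moving one workload to cheaper hardware."""
    return (before_per_month - after_per_month) * 12


def cost_ratio(before_per_month: float, after_per_month: float) -> float:
    """How many times cheaper the new setup is."""
    return before_per_month / after_per_month


savings = annual_savings(47_000, 9_000)
ratio = cost_ratio(47_000, 9_000)
print(f"${savings:,.0f} saved per year, {ratio:.1f}x cheaper")
# prints: $456,000 saved per year, 5.2x cheaper
```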

Scale that to OpenAI or Anthropic, and you're talking billions in annual costs that specialized hardware could cut several-fold.

And it doesn't stop there: we're paying for HBM3e (High Bandwidth Memory) on inference nodes when 90% of the weights don't require that level of premium bandwidth for 80% of user queries. It's buying a Lamborghini when you need a delivery van.

What Decoupling Actually Enables

When you stop compromising for both workloads, you can optimize each aggressively.

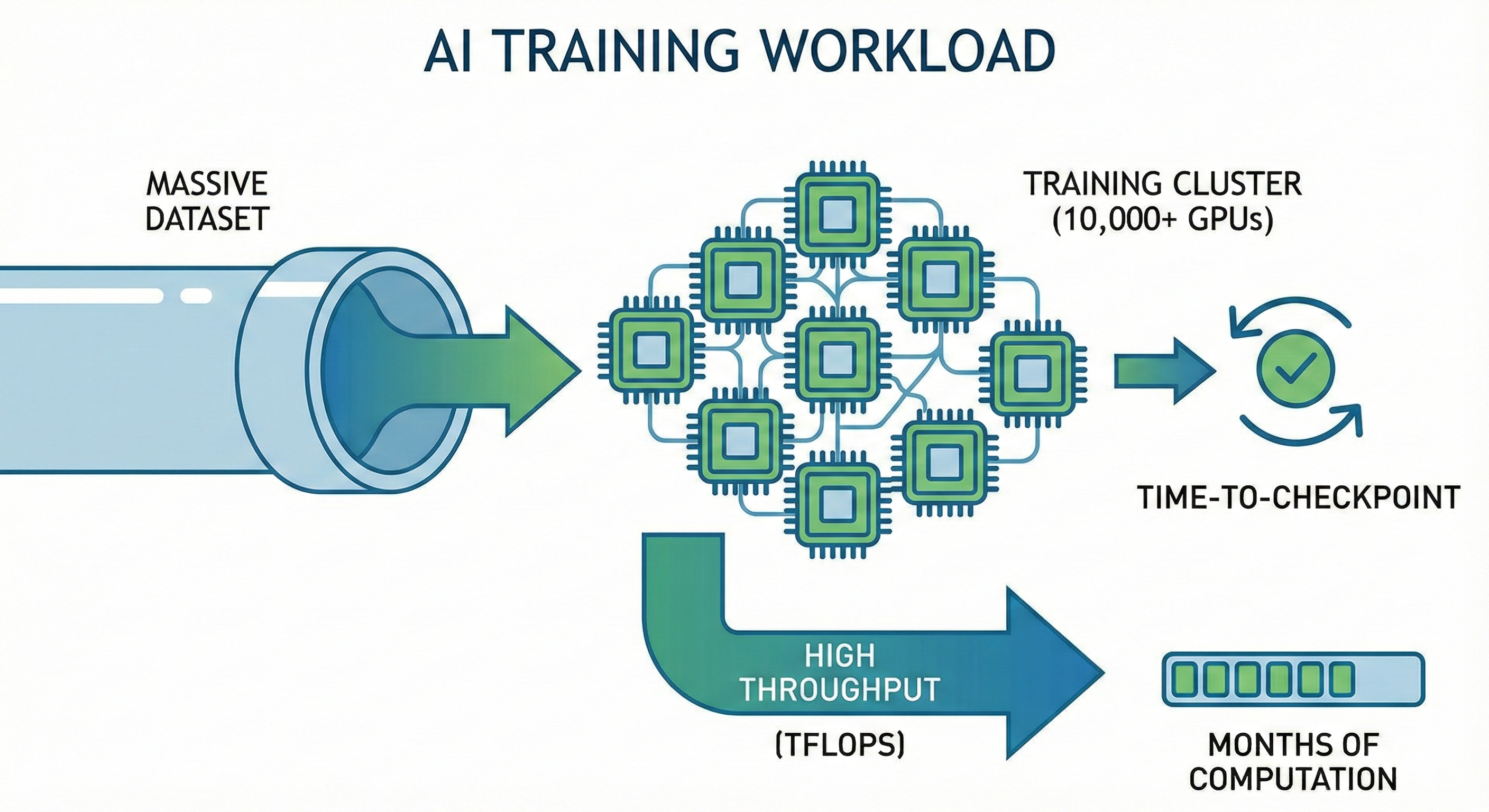

Training Hardware Unleashed

Hardware-level checkpointing. At 100,000 GPU scale, hardware failure is the #1 training cost killer. Specialized training hardware should checkpoint at the silicon level, not rely on distributed systems prayers.
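To see why failure handling dominates at this scale, here is a rough sketch using Young's classic first-order approximation for the checkpoint interval. It assumes independent failures and that any single GPU failure stops the run. The per-GPU MTBF and checkpoint write time are hypothetical inputs, not measurements.

```python
import math


def cluster_mtbf_hours(gpu_mtbf_hours: float, num_gpus: int) -> float:
    """Mean time between failures for the whole cluster, assuming
    independent failures and that any one failure halts the job."""
    return gpu_mtbf_hours / num_gpus


def young_checkpoint_interval_hours(checkpoint_cost_hours: float,
                                    mtbf_hours: float) -> float:
    """Young's approximation: sqrt(2 * checkpoint_cost * MTBF)."""
    return math.sqrt(2 * checkpoint_cost_hours * mtbf_hours)


# Hypothetical inputs: 50,000-hour per-GPU MTBF, 100,000 GPUs,
# and a checkpoint that takes 3 minutes to write.
mtbf = cluster_mtbf_hours(50_000, 100_000)  # 0.5 hours
interval = young_checkpoint_interval_hours(3 / 60, mtbf)
print(f"cluster MTBF: {mtbf * 60:.0f} min")
print(f"checkpoint roughly every {interval * 60:.1f} min")
```

Under these assumed numbers the cluster fails about twice an hour, so a faster checkpoint path shrinks both the write overhead and the work lost per failure.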

Memory bandwidth over everything. Training is throughput-bound, not latency-bound. Pack in massive HBM bandwidth even if individual access latency is higher. Batch sizes in the thousands. BF16 or FP8 mixed precision, with scaling factors where the narrower format needs them.

Ultra-fast interconnects. NVLink, InfiniBand at 400 Gb/s and up. Training clusters need GPUs talking constantly. Most inference workloads are far less interconnect-intensive, though large distributed inference setups are an exception.

Power takes a back seat to throughput. Yes, hyperscalers care about power density and cooling, but for training the priority is cost-per-FLOP. A training cluster consuming megawatts is acceptable if it delivers results faster.

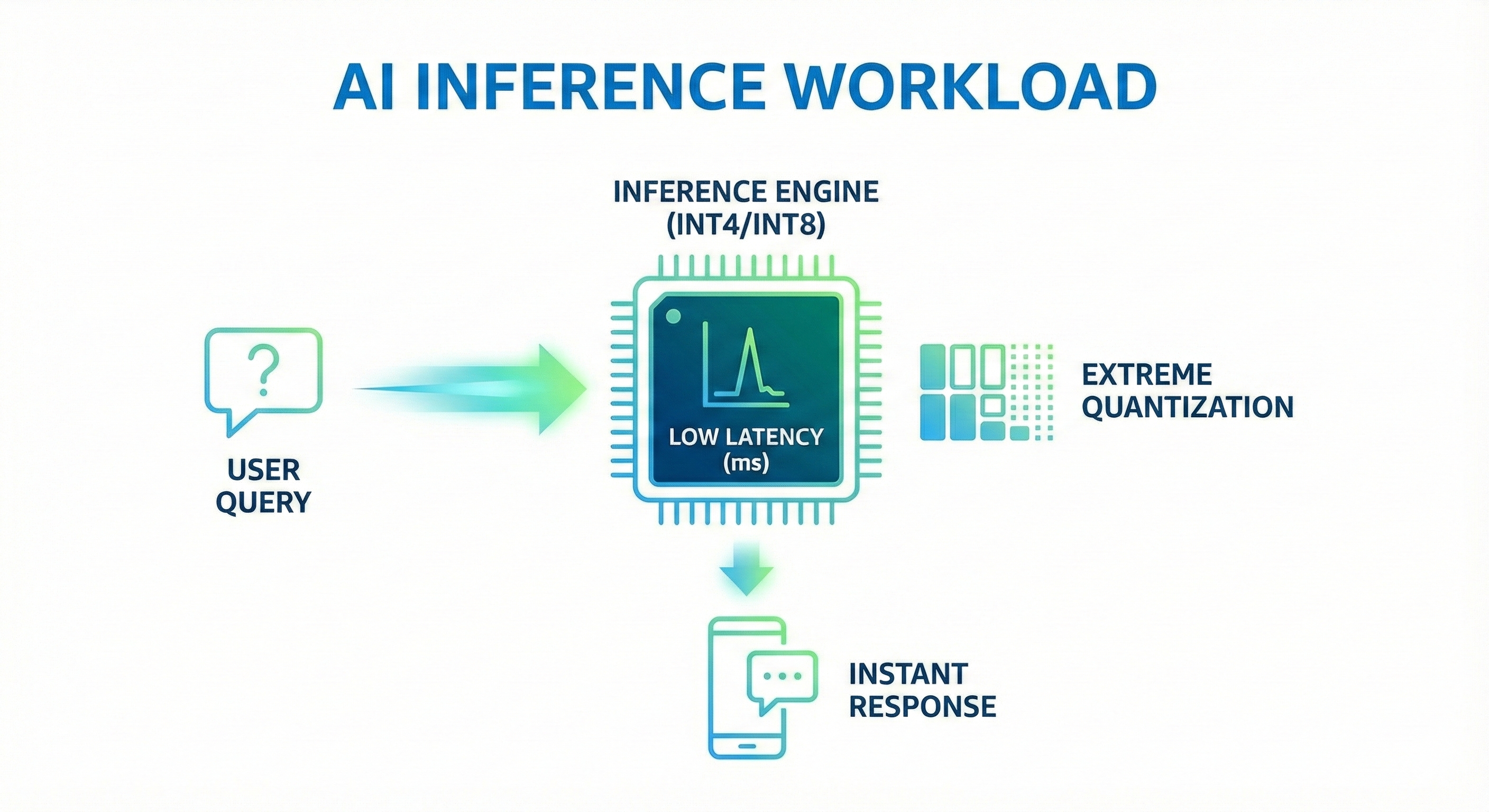

Inference Hardware Unleashed

Extreme quantization. INT4, and INT2 for certain layers, gives 8-16x memory savings over FP32 weights (4-8x over FP16). Training hardware struggles with this because maintaining gradient precision is critical. Inference hardware doesn't care: the weights are frozen.
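A minimal sketch of what low-bit quantization does to frozen weights. It uses symmetric per-tensor quantization, which is only one of several schemes and is chosen here for brevity. The function names are illustrative. The memory ratio is simply the ratio of bit widths.

```python
from typing import List, Tuple


def quantize_symmetric(weights: List[float], bits: int) -> Tuple[List[int], float]:
    """Map floats to signed integers in [-qmax, qmax] with one shared scale."""
    qmax = 2 ** (bits - 1) - 1
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    q = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights at inference time."""
    return [v * scale for v in q]


def memory_ratio(from_bits: int, to_bits: int) -> float:
    """Storage reduction from narrowing each weight."""
    return from_bits / to_bits


q, scale = quantize_symmetric([7.0, -7.0, 1.0, 0.0], bits=4)
print(q, scale)                 # [7, -7, 1, 0] 1.0
print(memory_ratio(32, 4))      # 8.0
print(memory_ratio(32, 2))      # 16.0
```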

Speculative decoding pipelines. A small draft model guesses several tokens ahead and the big model verifies them: dual-stream execution. Training hardware optimizes for single-path gradient flow. Inference hardware should be built for this from the ground up.
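Here is a toy sketch of the greedy variant of speculative decoding. The two "models" are stand-in functions invented for illustration, and the verification step is a plain loop, whereas a real system would check all draft tokens in a single batched forward pass of the large model.

```python
from typing import Callable, List

Model = Callable[[List[int]], int]  # context -> next token (greedy)


def speculative_decode(target: Model, draft: Model, prompt: List[int],
                       max_new_tokens: int, k: int = 4) -> List[int]:
    """Draft proposes k tokens; target keeps the longest agreeing prefix
    and then adds one token of its own. The result always matches plain
    greedy decoding with the target model."""
    out = list(prompt)
    goal = len(prompt) + max_new_tokens
    while len(out) < goal:
        proposal: List[int] = []
        for _ in range(k):
            proposal.append(draft(out + proposal))
        accepted: List[int] = []
        for tok in proposal:
            expected = target(out + accepted)
            if tok != expected:
                accepted.append(expected)  # correct the first wrong guess
                break
            accepted.append(tok)
        else:
            accepted.append(target(out + accepted))  # all guesses held: bonus token
        out.extend(accepted)
    return out[:goal]


def toy_target(ctx: List[int]) -> int:
    """Hypothetical large model: counts upward modulo 10."""
    return (ctx[-1] + 1) % 10


def toy_draft(ctx: List[int]) -> int:
    """Hypothetical small model: same rule, but wrong whenever the answer is 5."""
    nxt = (ctx[-1] + 1) % 10
    return 0 if nxt == 5 else nxt


print(speculative_decode(toy_target, toy_draft, [3], max_new_tokens=8))
# [3, 4, 5, 6, 7, 8, 9, 0, 1]
```

The payoff is that each loop iteration costs one pass of the large model but can emit up to k + 1 tokens when the draft guesses well.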

Aggressive sparsity exploitation. Between 50% and 90% of weights can be zeroed in some pruned models. Training needs dense weights for gradient updates. Inference can skip entire matrix blocks where sparsity permits.
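Block skipping can be sketched in a few lines of plain Python. The storage layout below (a dictionary keyed by block coordinates) is an assumption made for readability. Real hardware and kernels use packed formats, but the idea is the same: pruned blocks are never read or multiplied.

```python
def to_blocks(dense, block_size):
    """Split a dense matrix into square blocks, dropping all-zero ones."""
    blocks = {}
    for br in range(len(dense) // block_size):
        for bc in range(len(dense[0]) // block_size):
            rows = dense[br * block_size:(br + 1) * block_size]
            block = [r[bc * block_size:(bc + 1) * block_size] for r in rows]
            if any(v != 0 for r in block for v in r):
                blocks[(br, bc)] = block
    return blocks


def block_sparse_matvec(blocks, block_size, n_rows, x):
    """Compute y = W @ x, touching only the blocks that survived pruning."""
    y = [0.0] * n_rows
    for (br, bc), block in blocks.items():
        for i in range(block_size):
            acc = 0.0
            for j in range(block_size):
                acc += block[i][j] * x[bc * block_size + j]
            y[br * block_size + i] += acc
    return y


W = [[1, 2, 0, 0],
     [3, 4, 0, 0],
     [0, 0, 0, 0],
     [0, 0, 5, 6]]
blocks = to_blocks(W, block_size=2)
print(len(blocks), "of 4 blocks stored")                 # 2 of 4 blocks stored
print(block_sparse_matvec(blocks, 2, 4, [1, 1, 1, 1]))   # [3.0, 7.0, 0.0, 11.0]
```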

Joules-per-token, not raw throughput. Actually run on-device at phone power budgets (1-5 watts). Training hardware optimizing for this would be nonsense.
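Joules-per-token is just power divided by token rate, since a watt is a joule per second. A quick sketch follows; the phone figures (3 W, 15 tokens per second, 15 Wh battery) are hypothetical values picked to fall inside the power range above.

```python
def joules_per_token(power_watts: float, tokens_per_second: float) -> float:
    """Energy spent per generated token."""
    return power_watts / tokens_per_second


def tokens_per_charge(battery_watt_hours: float, j_per_token: float) -> float:
    """Tokens a battery could fund if inference were the only load."""
    return battery_watt_hours * 3600 / j_per_token


# Hypothetical phone: 3 W sustained, 15 tokens/s, 15 Wh battery.
jpt = joules_per_token(3.0, 15.0)
print(f"{jpt:.2f} J/token, about {tokens_per_charge(15.0, jpt):,.0f} tokens per charge")
```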

The KPIs Are Different Too

Here's another way to see why training and inference can't share hardware: they don't even measure success the same way.

LLM Inference performance comes down to:

- TTFT (time-to-first-token): The delay from prompt to first response—what makes your product feel fast or slow

- TPS (tokens-per-second): How quickly subsequent tokens stream—what determines your cost-per-conversation
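Both inference metrics can be measured from any token stream. The helper below is an illustrative sketch, not a standard benchmark tool. It takes an injectable clock so the example runs instantly, and in practice you would pass the generator returned by your serving client.

```python
import time
from typing import Callable, Iterable, Tuple


def measure_stream(tokens: Iterable[str],
                   clock: Callable[[], float] = time.perf_counter
                   ) -> Tuple[float, float, int]:
    """Return (ttft_seconds, tokens_per_second, token_count).

    TTFT runs from the call to the first token. TPS is the decode rate
    over the tokens that follow the first one."""
    start = clock()
    first = None
    last = start
    count = 0
    for _ in tokens:
        last = clock()
        if first is None:
            first = last
        count += 1
    if first is None:
        raise ValueError("stream produced no tokens")
    decode_time = last - first
    tps = (count - 1) / decode_time if count > 1 and decode_time > 0 else 0.0
    return first - start, tps, count


# Fake clock: request at t=0.0, first token at 0.5 s, then one token every 0.25 s.
fake_clock = iter([0.0, 0.5, 0.75, 1.0, 1.25, 1.5]).__next__
print(measure_stream(["a", "b", "c", "d", "e"], clock=fake_clock))
# (0.5, 4.0, 5)
```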

LLM Training performance comes down to:

- Throughput (samples/tokens per second): How much data you push through your cluster—your cost efficiency

- Time-to-checkpoint: How fast you recover from hardware failures—your risk management at 100,000 GPU scale

General-purpose hardware compromises on all four metrics. Specialized hardware can actually optimize for what matters in each workload.

When you ask "what GPU should we buy?" you're really asking "which two metrics do I actually care about?" Because general-purpose hardware won't excel at any of the four.

Why This Is Taking So Long

The shift is happening, but incompletely.

AWS already has Trainium for training and Inferentia for inference. Tesla and Waymo run entirely different inference stacks than they use for training perception models—they figured out years ago that the car can't wait for a training-optimized FLOP.

Even NVIDIA recognized this. Their non-exclusive licensing agreement with Groq and the accompanying talent acquisition in late 2025 weren't about protecting their general-purpose GPU moat. They were an acknowledgment that specialized inference was inevitable. They acquired the LPU (Language Processing Unit) compiler expertise to bridge between training dominance and the instant-inference world.

But the incentives remain misaligned. Hyperscalers have billions in brand-new infrastructure they need to depreciate over 3-5 years. Enterprise customers want one SKU, one maintenance contract, one training program for ops teams.

When you've got that much capital tied up in general-purpose infrastructure, market fragmentation into specialized silicon threatens your business model—even if it's technically superior.

This is path dependency at planetary scale. The shift will accelerate from the edges: companies with no sunk costs, no CUDA moat to protect, and everything to gain from purpose-built systems.

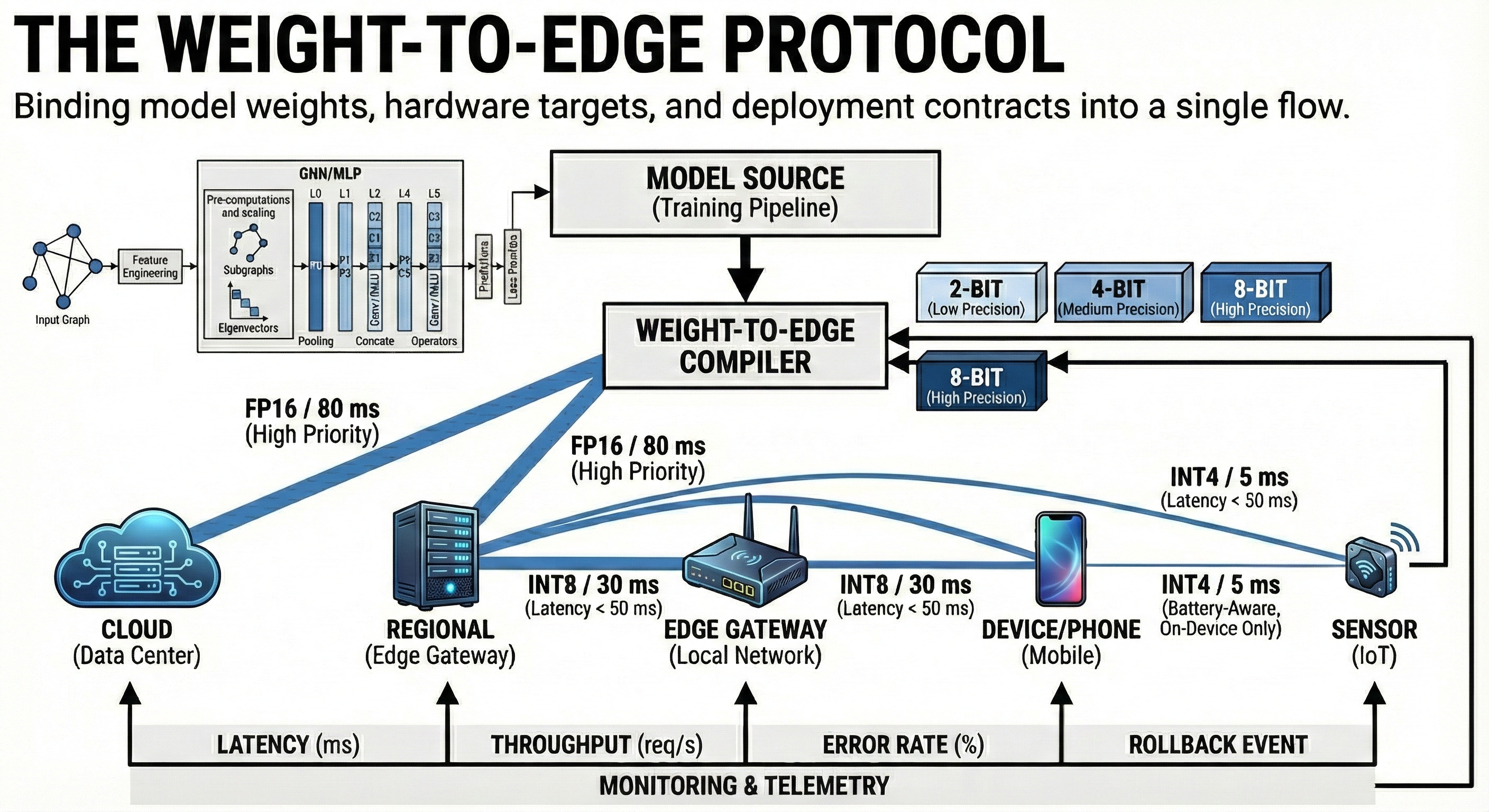

The Missing Piece: The Weight-to-Edge Protocol

Here's where everyone is actually stuck, and it's the most important part.

We're living in the Dark Ages of Pickles and Safetensors. And it's worse than I thought—I recently had someone dig into the current state of model export standards, and what they found confirms everything I've been seeing in the field.

Parts of a standard exist, but in fragmented, incompatible ways that make them nearly useless. Here are the five pieces we need for a true Weight-to-Edge protocol:

1. Export Formats with Quantization Statistics Built In

Current state: Partially there. ONNX, TFLite, TensorRT, and GGML/GGUF can store quantization scales and zero-points, but each does it differently. There's no standard. Tooling still treats quantization as an optional post-training step, creating multiple incompatible variants of the same model instead of one canonical artifact with well-defined quantization built in.

2. Calibration Datasets as Required Metadata

Current state: Rarely standardized. INT8/PTQ (Post-Training Quantization) flows let you point to a calibration dataset during export, but the dataset isn't packaged with the model. There's no convention that blocks publishing a model without bundled calibration data, or at least a reference to it.

3. Performance Contracts in Model Artifacts

Current state: Nope. Frameworks provide benchmarking tools and example latency/throughput tables, but these are side documents or config files—not structured contracts that ship with the model. There's no schema where a model file carries machine-readable SLOs (Service Level Objectives) that deployment systems can validate automatically.
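To make "machine-readable SLOs" concrete, here is one possible shape for such a contract. No existing model format defines this; the class, field names, and hardware label are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class PerformanceContract:
    """Hypothetical SLO block that could ship inside a model artifact."""
    target_hardware: str
    max_ttft_ms: float
    min_tokens_per_second: float

    def violations(self, measured: Dict[str, float]) -> List[str]:
        """Compare benchmark results against the contract."""
        problems = []
        if measured["ttft_ms"] > self.max_ttft_ms:
            problems.append(
                f"TTFT {measured['ttft_ms']} ms exceeds {self.max_ttft_ms} ms")
        if measured["tokens_per_second"] < self.min_tokens_per_second:
            problems.append(
                f"TPS {measured['tokens_per_second']} is below "
                f"{self.min_tokens_per_second}")
        return problems


contract = PerformanceContract("example-inference-asic",
                               max_ttft_ms=100.0, min_tokens_per_second=40.0)
print(contract.violations({"ttft_ms": 85.0, "tokens_per_second": 52.0}))   # []
print(contract.violations({"ttft_ms": 140.0, "tokens_per_second": 31.0}))  # two problems
```

A deployment system could run this check after its own benchmark pass and refuse to promote a build that returns a non-empty list.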

4. Hardware-Aware Compilation Hints

Current state: Fragments. Export processes let you target hardware-specific optimizations, but these are parameters you pass during export, not persistent hints in the model that downstream compilers understand. Some formats like TensorRT engines are tightly bound to specific hardware, but that's a "baked-for-this-target binary," not reusable hints.

5. Versioning and Rollback That Actually Works

Current state: Only if you built good deployment infrastructure. Model registries and serving platforms implement version tags and rollback policies, but these are external orchestration features. The model files themselves—ONNX, safetensors, whatever—know nothing about deployment history or rollback semantics.

So in today's ecosystem, you have:

- Items 1 and 4 partially, but fragmented and backend-specific

- Items 2 and 3 mostly don't exist as first-class model metadata

- Item 5 only where organizations built mature deployment platforms
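For illustration, the five pieces could travel together in a single manifest. What follows is a hypothetical sketch, not an existing standard: every field name and example value is invented here.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Dict, List, Optional


@dataclass
class WeightToEdgeManifest:
    """Hypothetical manifest bundling the five items above."""
    model_name: str
    version: str                           # item 5: this release
    previous_version: Optional[str]        # item 5: rollback target
    quantization: Dict[str, object]        # item 1: scheme, scales, zero-points
    calibration_dataset: Optional[str]     # item 2: bundled path or reference
    slos: Dict[str, float] = field(default_factory=dict)        # item 3
    compile_hints: Dict[str, str] = field(default_factory=dict)  # item 4

    def missing_fields(self) -> List[str]:
        """Fields a registry could require before accepting the artifact."""
        missing = []
        if not self.quantization:
            missing.append("quantization")
        if not self.calibration_dataset:
            missing.append("calibration_dataset")
        if not self.slos:
            missing.append("slos")
        return missing

    def to_json(self) -> str:
        return json.dumps(asdict(self), indent=2, sort_keys=True)


manifest = WeightToEdgeManifest(
    model_name="example-model",
    version="2.1.0",
    previous_version="2.0.3",
    quantization={"scheme": "int4-symmetric", "granularity": "per-channel"},
    calibration_dataset=None,  # deliberately omitted to show the check
    slos={"max_ttft_ms": 100.0},
    compile_hints={"preferred_layout": "blocked"},
)
print(manifest.missing_fields())  # ['calibration_dataset']
```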

This fragmentation forces three expensive steps at every company:

- Export → convert → re-quantize (weeks of engineering time)

- Re-benchmark blindly (no performance contracts)

- Debug deployment mismatches (no standard hints)

Every handoff from training to inference requires weeks of custom work. Every deployment is a bespoke integration nightmare.

Right now, teams export a model from their training cluster and then spend weeks figuring out how to make it run efficiently on inference hardware. That's backwards.

The training system should export inference-ready artifacts, not raw checkpoints that need post-processing.

Someone needs to own the handoff. Right now, nobody does. And until someone does, the "Great Decoupling" will remain half-finished—leaving billions on the table.

The Hydration Problem Nobody Talks About

Because we don't have a Weight-to-Edge protocol, we also have a "Hydration Problem": the time it takes to load massive weights into specialized memory before the first token is even generated.

You've exported your model, you've got your inference hardware, and now you need to actually load gigabytes of weights from storage into HBM before anything can run. For large models, this can take minutes. Every cold start. Every scale-up event. Every deployment.
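A back-of-the-envelope estimate shows where the minutes come from. The sketch below ignores parsing, sharding, and host-to-device copies, so treat it as a lower bound. The model size and storage bandwidths are hypothetical.

```python
def hydration_seconds(params_billions: float, bytes_per_param: float,
                      bandwidth_gb_per_s: float) -> float:
    """Lower-bound load time: weight size divided by sustained read bandwidth."""
    size_gb = params_billions * bytes_per_param  # 1e9 params * bytes / 1e9
    return size_gb / bandwidth_gb_per_s


# Hypothetical 70B-parameter model.
print(hydration_seconds(70, 2.0, 2.0))   # FP16 over 2 GB/s network storage: 70.0 s
print(hydration_seconds(70, 2.0, 7.0))   # FP16 from 7 GB/s local NVMe: 20.0 s
print(hydration_seconds(70, 0.5, 7.0))   # INT4 from the same NVMe: 5.0 s
```

Quantized artifacts and faster weight paths attack the same number from two sides: fewer bytes to move, and more bytes per second.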

This isn't a technical curiosity. It's a direct cost:

- Cold starts delay customer requests

- Autoscaling becomes expensive (you can't spin up new nodes fast enough)

- You keep extra capacity running "just in case" because spinning down and back up is too slow

Specialized inference hardware could solve this with smart weight streaming or persistent weight caching at the silicon level. But because we're using general-purpose training hardware for inference, we're stuck with naive "load everything into RAM" approaches.

This is another symptom of the same disease: no clean handoff protocol between training and deployment means every team reinvents these wheels badly.

The Action Plan: From Theory to Profit

These aren't theoretical problems. They're costing you real margin every hour your racks are running.

We're at a rare moment where the AI hardware market is still fluid and dominant designs haven't fully ossified. The companies that recognize this decoupling early will capture disproportionate value; those that don't will watch their margins evaporate.

Here's exactly how to start, based on your seat at the table:

For Infrastructure & Finance Leaders

The Advice: Stop asking "what GPU should we buy?" and start asking "what's our training vs. inference budget split?" Build separate systems for each.

The Day 1 Task: Pick your highest-volume inference workload. Move it off your training cluster onto purpose-built inference hardware (LPUs or ASICs). Measure the delta. If costs drop 3-5x, which is what I consistently see in my client engagements, you have the internal business case for full decoupling.

For Model & Tool Developers

The Advice: Stop treating deployment as "someone else's problem." If your model is hard to deploy, it's a bad model.

The Day 1 Task: Export your next model with a "Weight-to-Edge" mindset. Include INT4/INT8 quantization metadata and a calibration dataset in the artifact itself. Make your deployment story the primary reason customers choose you over a "raw-weight" competitor.

For Procurement & Hardware Evaluators

The Advice: Demand clarity on specific workloads. General-purpose benchmarks are a trap designed to hide inefficiency.

The Day 1 Task: Ask every vendor two "deadly" questions:

- "Show me your TTFT benchmarks at batch-1 for our specific context window."

- "Show me your throughput benchmarks at 100,000 GPU scale."

If they give you the same hardware SKU for both answers, walk away. You're being sold a compromise you can't afford.

The era of general-purpose AI hardware is ending. The "Inference Flip," where global inference spend surpassed training spend, shows that the real money is in execution, not just creation. NVIDIA's pivot into the Groq ecosystem was the final confirmation: specialized infrastructure is no longer a niche. It's the new baseline.

The decoupling is happening. With you or without you.

Published January 2026