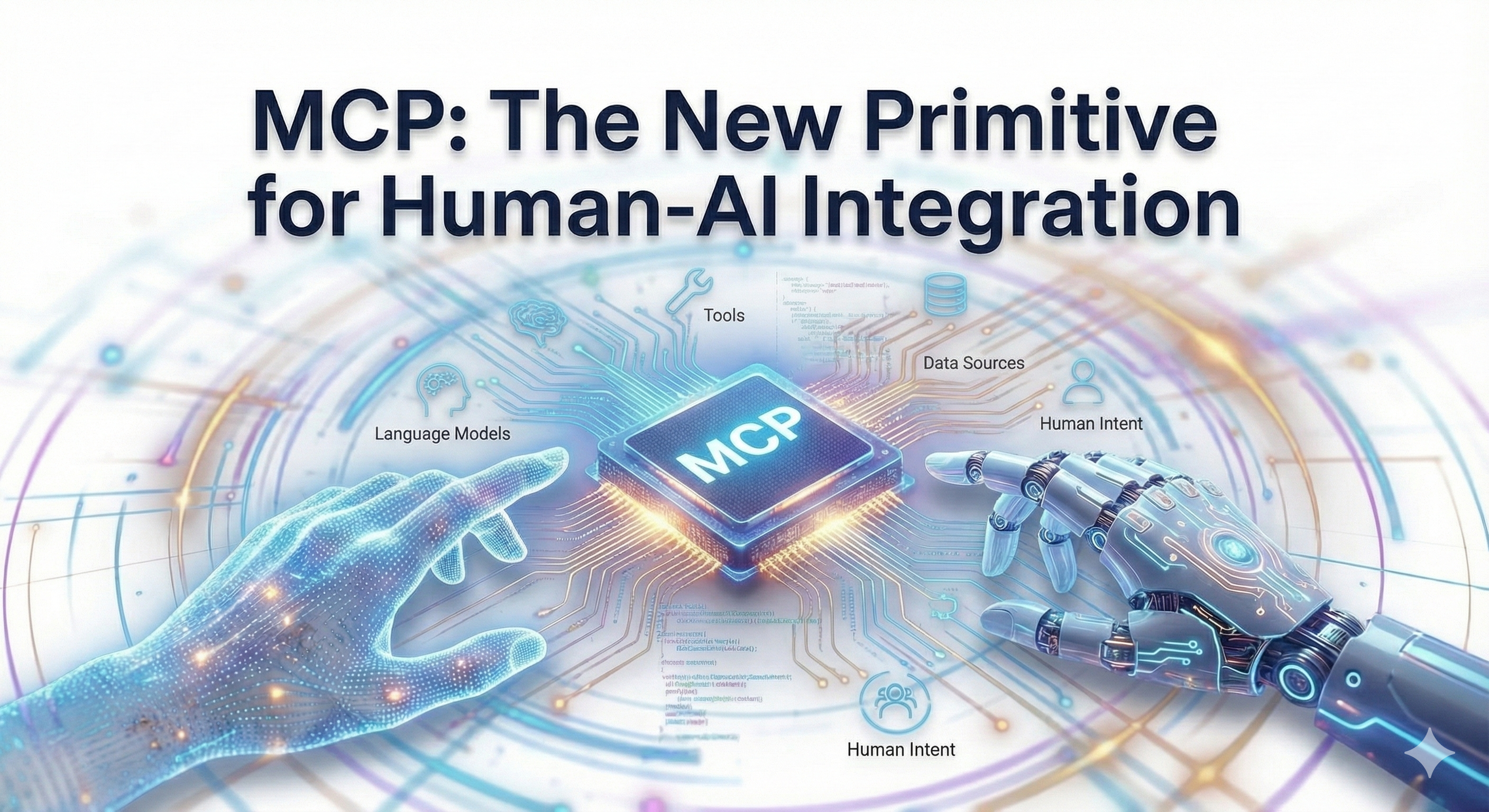

MCP: The New Primitive for Human-AI Integration

Everyone's asking the wrong question about MCP.

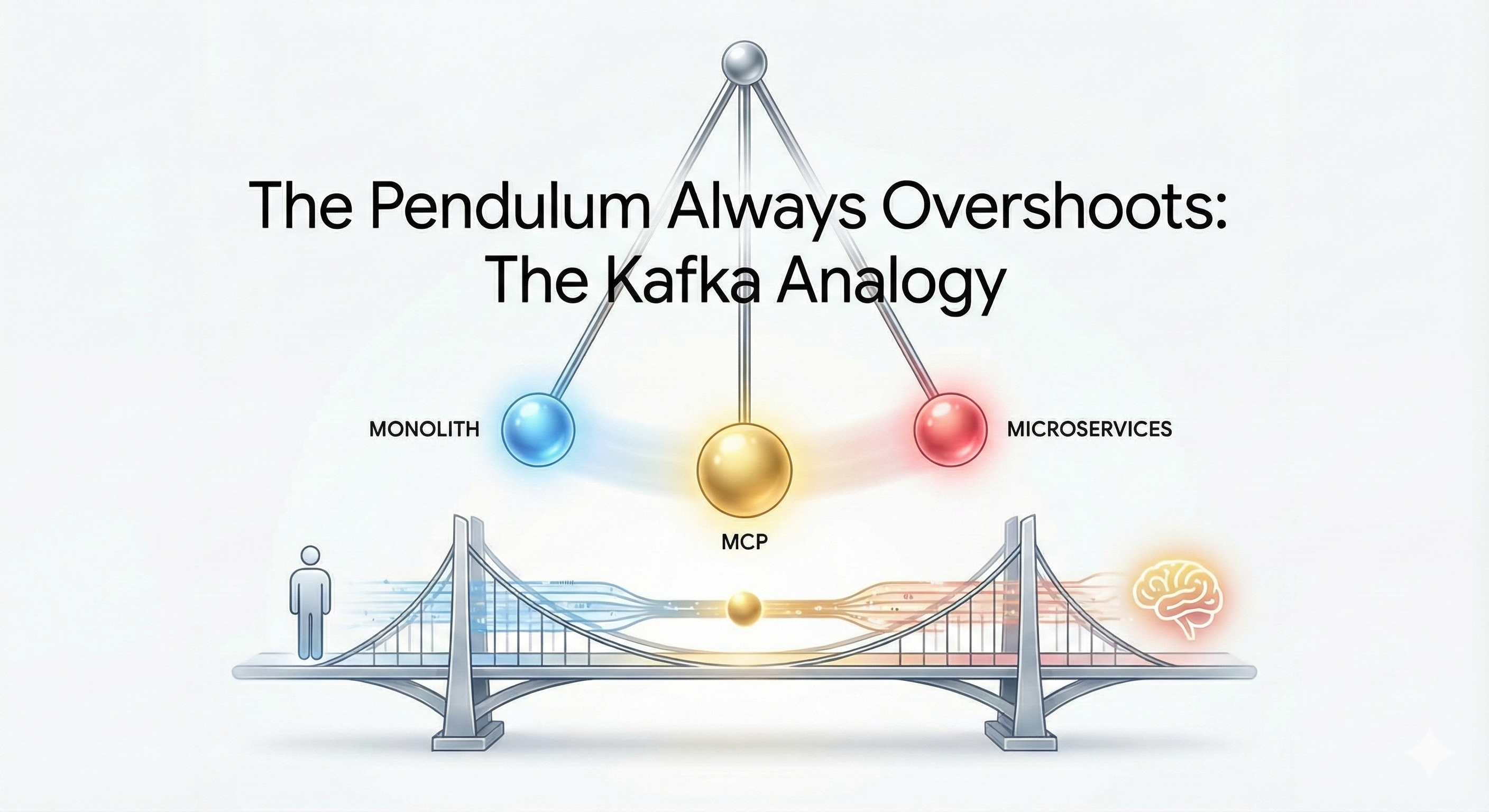

I read at least three articles last week arguing that LLMs will bring us back to monoliths. The logic: "AI can understand the whole codebase now, so why maintain boundaries?" That's like asking whether Kafka killed microservices. It didn't—it made them work better by providing the right communication primitive.

This is exactly the kind of pendulum overshoot I've watched happen for 25+ years.

MCP isn't an architecture. It's a communication pattern. And just like Kafka decoupled services through event streams rather than consolidating them into monoliths, MCP decouples human intent from implementation without collapsing architectural boundaries.

The Pendulum Always Overshoots

Here's a pattern I've observed across 25+ years in tech: the larger an organization or community, the harder the pendulum swings past the optimal point toward the opposite extreme.

We went from monoliths to microservices—but didn't stop at "right-sized services." We kept going until we had teams deploying individual functions as separate services, drowning in orchestration overhead and distributed system complexity that served no business purpose.

This overshoot isn't accidental. It's structural:

- Consultancy capture: The bigger the market, the more money in selling radical transformation

- Career incentives: No one gets promoted for "we kept doing the sensible thing"

- Coordination lag: By the time 10,000 engineers pivot, the leading edge already knows it's wrong

- Narrative momentum: Conference talks, books, and courses become infrastructure supporting the extreme position

It reminds me of the real estate guru era of the 1980s and 90s. Real estate investor John T. Reed has documented this progression on his famous "Guru Rating" site. The pattern: each author had to make a more extreme promise than the last just to break through the noise. "Get rich with 20% down" was boring. So it became "10% down" then "5% down" then Robert Allen's bestseller "Nothing Down" (0% down). To beat that, others started promising "cash back at closing"—literally "they pay YOU to take the property."

Not every "nothing-down" guru was fraudulent—but enough collapsed or faced serious legal trouble that the pattern became undeniable. Allen filed for bankruptcy. Others faced criminal charges or civil judgments. Reed's documentation reads like a cautionary tale about what happens when promises decouple from reality.

Tech follows the same arc. A reasonable idea—"microservices improve deployment independence"—becomes "split everything into separate services" becomes "one function per service" becomes organizational dysfunction.

Now the pendulum is swinging back. And because we overshot toward microservices, the correction is overshooting toward monoliths. MCP is getting caught in this swing, mischaracterized as an architectural consolidation pattern when it's actually something else entirely.

The "AI-Induced Monolith" Fallacy

I've watched this movie before. Different technology, same logic, same outcome.

In 2010: "Cloud computing means we don't need infrastructure planning anymore."

In 2015: "Microservices means we can scale infinitely."

In 2020: "Kubernetes solves all our deployment problems."

Now in 2025: "AI can navigate complexity, so we should abandon service boundaries."

This is the ultimate pendulum overshoot. It mistakes comprehension for cohesion.

Just because an AI can read a 10-million-line monolithic codebase doesn't mean your organization can safely deploy it. I've been building systems since before most of these frameworks existed, and here's what hasn't changed: Architecture was never just about understanding—it's about managing operational reality when things go wrong at 3am.

Let me be specific about what breaks:

Blast radius matters. I've seen both scenarios up close. When an AI-suggested change breaks a microservice, you lose a feature. Customer impact: annoying. Recovery time: minutes to hours. When it breaks a monolith, you're down. Customer impact: catastrophic. Recovery time: hours to days.

Deployment velocity doesn't disappear as a problem. I spent many years at a very large firm that was decades behind the tech curve. You know what hundreds of engineers trying to merge into a single binary looks like? Merge conflict hell that no amount of AI "understanding" fixes. You're just trading distributed systems complexity for human coordination nightmares.

Ownership doesn't disappear. An AI doesn't "own" a service at 3:00 AM when the database is locking up. Humans do. And humans need boundaries to know what they're responsible for. I've built teams across multiple continents—the moment accountability gets fuzzy, everything slows down.

The folder analogy I keep coming back to: saying we should move back to monoliths because of AI is like saying we should stop using folders on our computers because "Search" got better. Search helps you find things. Organization helps you manage things. You need both.

Here's what MCP actually gives you: The AI gets "global search" and cross-system reasoning capabilities—the cognitive benefits of a unified codebase. Your infrastructure stays decoupled into right-sized services—the operational benefits of isolation and independent deployment.

The principle: Comprehension doesn't require cohesion. The AI can understand your entire system without your entire system being one giant binary.

Stop asking whether monoliths or microservices are "better." Start asking: can an AI coordinate across our systems without forcing them back together?

That's what MCP enables.

What MCP Actually Is

MCP doesn't care about your service topology. It's a protocol for AI agents to interact with tools and data sources. Whether you have 5 macroservices or 500 microservices, MCP provides the same abstraction layer.

Think about what Kafka did for service communication. Before Kafka, you had:

- Point-to-point integrations becoming spaghetti

- Tight coupling between producers and consumers

- No good answer for "who needs to know about this event?"

Kafka didn't force you to restructure your services. It gave you a better communication primitive—the event stream—that worked regardless of your architecture.

MCP is doing the same thing for human-AI integration. Before MCP, you had:

- Custom integrations for every AI tool

- Tight coupling between AI capabilities and data sources

- No good answer for "how does an AI agent access this system?"

MCP provides the communication primitive—the protocol for tool invocation and context sharing—that works regardless of your underlying architecture.
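To make "tool invocation" concrete: MCP messages are JSON-RPC 2.0, and a server advertises its tools through `tools/list` and runs them through `tools/call`. Here is a minimal sketch of that exchange using plain dictionaries and no SDK. The `check_inventory` tool, the `STOCK` data, and the `handle` dispatcher are hypothetical names invented for illustration, not part of any real server.

```python
import json

# Hypothetical tool catalog. The name/description/inputSchema shape follows
# the MCP tool definition; the tool itself is made up for illustration.
TOOLS = [{
    "name": "check_inventory",
    "description": "Return units in stock for a SKU.",
    "inputSchema": {
        "type": "object",
        "properties": {"sku": {"type": "string"}},
        "required": ["sku"],
    },
}]

STOCK = {"A-100": 42}  # stand-in for whatever service sits behind the tool


def handle(request: dict) -> dict:
    """Answer a JSON-RPC 2.0 request for the two core tool methods."""
    method, params = request["method"], request.get("params", {})
    if method == "tools/list":
        result = {"tools": TOOLS}
    elif method == "tools/call" and params.get("name") == "check_inventory":
        units = STOCK.get(params["arguments"]["sku"], 0)
        result = {"content": [{"type": "text", "text": str(units)}]}
    else:
        # Unknown method or unknown tool: reply with a JSON-RPC error object.
        return {"jsonrpc": "2.0", "id": request["id"],
                "error": {"code": -32601, "message": "Not supported in this sketch"}}
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}


call = {"jsonrpc": "2.0", "id": 1, "method": "tools/call",
        "params": {"name": "check_inventory", "arguments": {"sku": "A-100"}}}
response = handle(call)
print(json.dumps(response, indent=2))
```

Notice that nothing in the request says whether `check_inventory` lives in a monolith or in one of 500 services. The caller only sees the tool's name and schema, which is the whole point.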

If you want to dig deeper: Anthropic's MCP documentation is the canonical reference. For the "MCP as LSP for AI" framing, check out the discussions in early adopter communities. The parallels to Language Server Protocol are striking—same pattern of decoupling clients from servers through a standard protocol.

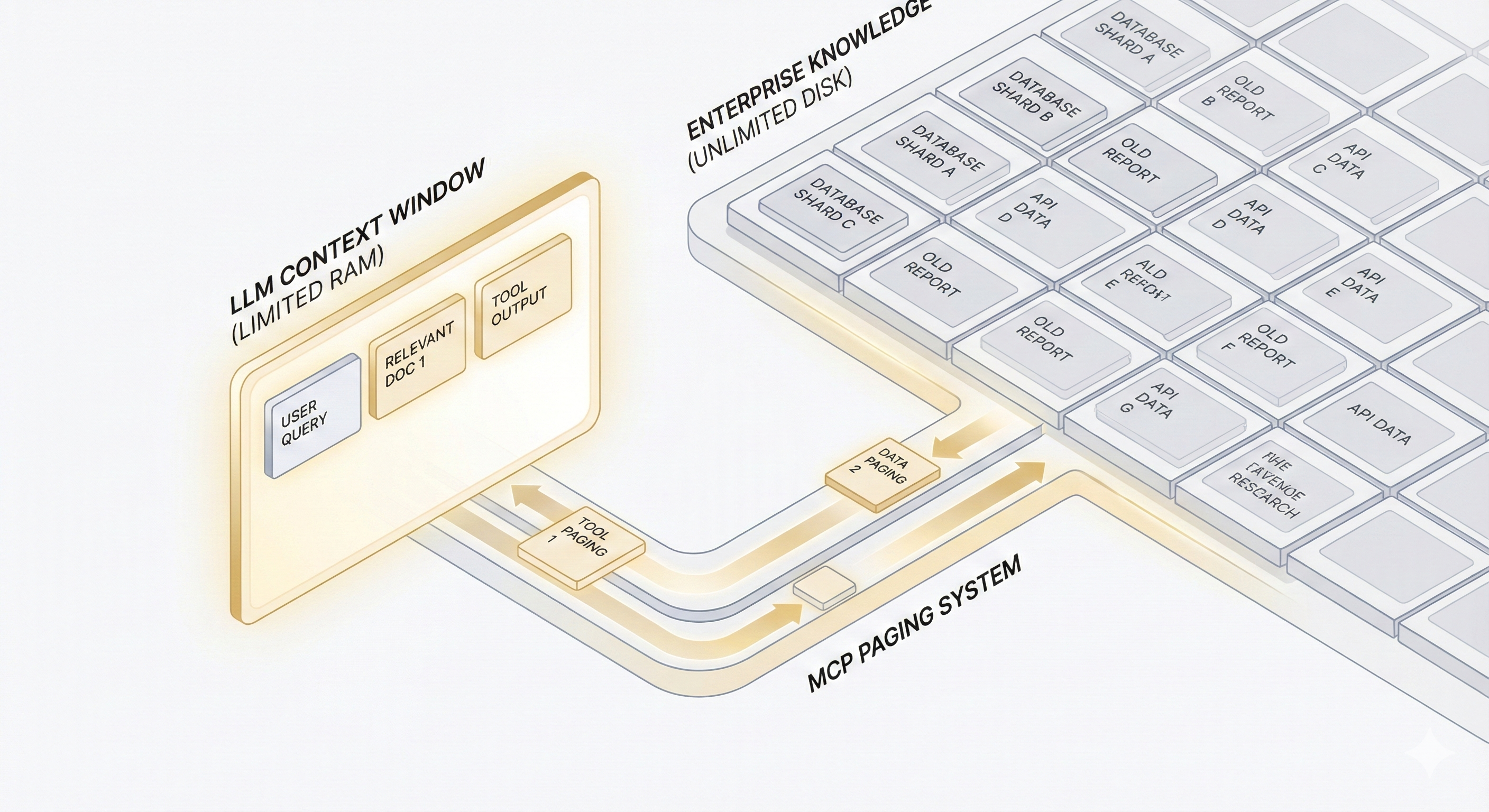

The Context Window Is the New RAM

Here's the deeper technical shift that most people miss because they're too busy arguing about architecture.

We're optimizing for a fundamentally new scarce resource.

In the '90s, I was optimizing for RAM. You paid attention to every memory allocation because 256MB was expensive. In the 2010s, using Yahoo's Hadoop clusters, we optimized for network bandwidth. Now? The constraint is the context window—how much information an AI can hold in its "working memory" at once.

This changes everything about how we build systems.

MCP isn't just a communication protocol. It's a paging system for LLMs.

Think about how your OS works. It doesn't load your entire hard drive into RAM and hope for the best. It moves data between slow storage and fast memory in pages—bringing in exactly what's needed for the current task, swapping out what isn't.

MCP does this for AI agents. Without it, we try to stuff everything into the initial prompt—the brute force approach I've watched teams attempt for the past two years. "Just cram the entire database schema into context." "Just include all our API docs up front." It doesn't scale.

With MCP, the agent becomes an active manager of its own memory. It pulls in exactly what it needs when it needs it. This is the shift from static context (traditional RAG—"here's every potentially relevant document") to dynamic orchestration ("let me query the exact data I need for this specific decision").
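The paging idea can be sketched in a few lines. This is a toy model, not how any particular agent works: the `SOURCES` dictionary, the word-count `tokens` estimate, and the 500-token budget are all assumptions chosen to show the contrast between loading everything up front and fetching only what one decision needs.

```python
# Hypothetical knowledge sources; in practice each would sit behind a tool.
SOURCES = {
    "orders_schema": "orders(id, customer_id, total, created_at) " * 50,
    "billing_docs": "Invoices are issued on the first of the month. " * 50,
    "hr_policy": "Vacation requests need manager approval. " * 50,
}


def tokens(text: str) -> int:
    """Crude token estimate: whitespace-separated words."""
    return len(text.split())


def static_context() -> str:
    """Brute force: put every source into the prompt up front."""
    return "\n".join(SOURCES.values())


def paged_context(needed: list[str], budget: int) -> str:
    """Fetch only the named sources, stopping before the budget is exceeded."""
    parts, used = [], 0
    for name in needed:
        cost = tokens(SOURCES[name])
        if used + cost > budget:
            break
        parts.append(SOURCES[name])
        used += cost
    return "\n".join(parts)


question_needs = ["orders_schema"]  # what this one decision actually requires
print(tokens(static_context()), "tokens up front")
print(tokens(paged_context(question_needs, budget=500)), "tokens on demand")
```

The static version pays for the HR policy and the billing docs on every question, whether or not they matter. The paged version pays only for what the current question touches.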

What this means for your architecture

Your systems don't need to be "AI-ready" by exposing everything upfront. They need to be "query-ready"—able to answer specific questions on demand.

I'm working with clients right now who are rebuilding their entire data layer to be "AI-compatible." Stop. You don't need to. MCP servers become the interface between an AI's limited working memory and your organization's unlimited stored knowledge.

The companies that figure this out first will have AI agents that can reason across their entire enterprise without drowning in noise. The ones that don't will keep hitting context limits and wondering why their AI initiatives feel so constrained.

I've seen both scenarios in the past six months. The difference in what's possible is stark.

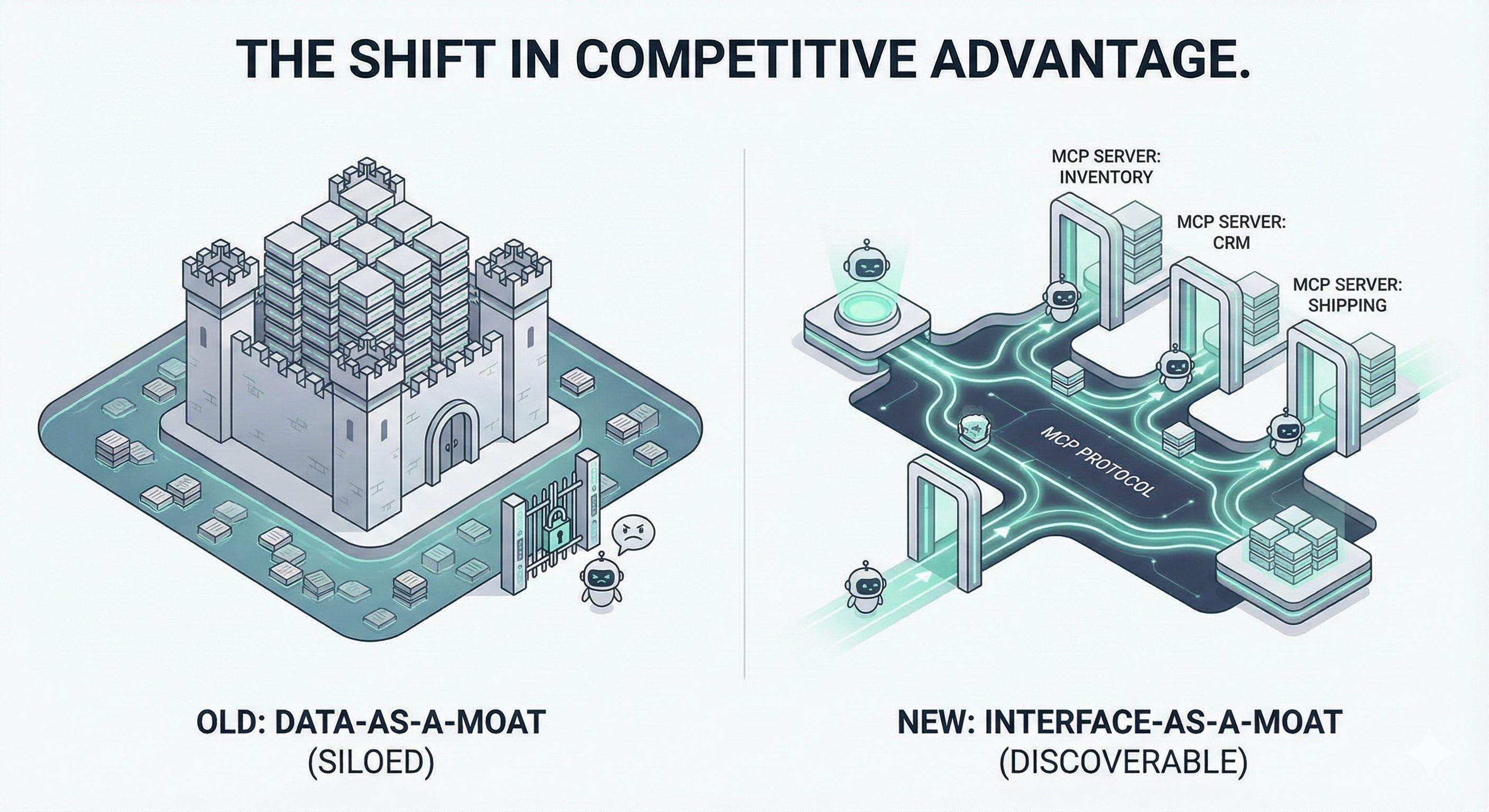

From Data-as-a-Moat to Interface-as-a-Moat

For years, the competitive mantra in tech was "whoever has the most data wins." Build the biggest dataset, train the best models, create network effects that compound your data advantage.

MCP is quietly flipping this assumption.

I'm watching this happen in real time with clients. In an AI-orchestrated world, data that isn't discoverable by a protocol is invisible, and therefore worthless to AI agents.

It doesn't matter if you have the world's best customer analytics database if an AI agent can't query it through a clean interface. Your competitor with worse data but better APIs just won the RFP because the procurement AI could actually talk to their systems.

The shift: Companies are about to stop competing on who has the biggest data warehouse and start competing on who has the most agent-friendly interfaces.

Think about this from a customer's perspective—actually, think about it from an AI agent's perspective, because that's increasingly who's making the initial filtering decisions:

Your competitor's systems have MCP servers. An AI agent can query inventory, check pricing, place orders, track shipments—all through natural language. Clean, fast, reliable.

Your systems require custom API integration, manual data export, or worse, phone calls and email.

The agent will increasingly route business to your competitor. Not because of data quality. Because of interface accessibility.

What this means for strategy

I'm having these conversations with C-suites right now:

- Your internal tools need to be "AI-discoverable," not just as a convenience for your employees, but because agents are how those employees will increasingly interact with the tools

- Your partner integrations need to speak MCP, or you'll lose to competitors who do

- Your product APIs need to be agent-native, or you'll watch AI-powered competitors eat your market share

The companies I'm advising are already asking: "How do we become the default choice for AI agents in our category?" Think of it as ATS compliance for companies instead of resumes: you keyword-optimize your entire business so agents pick you, not your competitor.

That's a fundamentally different question than "How do we get more users?"—but it's rapidly becoming the more important one.

We're entering an era where interface quality matters more than data quantity. The best data in the world doesn't help if the AI can't get to it cleanly.

The Death of the Router-Manager

Now let's talk about what this means for organizations—and it's going to make some people uncomfortable.

Much of middle management is human routing: taking a request from a VP, translating it into engineer-speak, fetching a report from three different systems, reformatting it, and passing it back up. I've watched this play out for 25 years, and MCP puts real pressure on that model.

Let me be clear: MCP doesn't kill (good) management. It kills routing.

If your value as a manager is "I know which Slack channel to ask" or "I'm the person who can interpret this dashboard for executives" or "I translate between business and engineering"—MCP is automating a significant part of your current role. The AI can now query the systems directly, synthesize the data, and present it in whatever format the requester needs.

But if you're the manager who can say "here's why that quarterly metric is actually misleading" or "we should measure this completely differently" or "here's how we develop Sarah from mid-level to tech lead" or "I've seen this pattern before and here's what actually matters"—you just became 10x more valuable.

Because the AI freed you from information shuffling, and information shuffling was never the valuable part of management anyway.

The managers I admire and respect—the ones with deep domain expertise, who are exceptional at talent development, who make their teams better and more capable—they're about to have their leverage multiplied. They can finally focus on the work that actually requires human judgment and experience.

The managers who keep their teams down to make themselves look important, who hoard information as a power base, who add process to justify their existence—they're about to discover that organizations can see right through them when the AI removes their monopoly on information flow.

MCP doesn't just enable conversational ops. It exposes who was adding value all along.

The Real Paradigm Shift: Conversational Ops

Here's where it gets interesting—and here's where most of the current MCP discussion completely misses the point.

MCP enables what I call "conversational ops"—the ability for non-technical stakeholders to interact directly with systems that were previously locked behind engineering gatekeepers.

I've watched this play out across multiple client engagements. Stop imagining abstract "AI assistants." Start imagining:

- Your marketing director queries production metrics in natural language at 9am before the campaign launch—without filing a ticket, without waiting for a dashboard, without a data analyst translating the request

- Your operations manager triggers infrastructure scaling at 10pm on Thanksgiving Eve because they just got a call that Black Friday traffic is spiking early—no JIRA ticket, no on-call engineer woken up

- Your product manager gets real-time insights across five previously siloed systems during a customer call—and can answer the question before the customer hangs up

- Your finance lead kicks off cross-functional workflows through conversation instead of three days of email threading to coordinate a simple approval

This isn't about consolidating your architecture. It's about adding a human-interface layer that can orchestrate across it.

The deeper shift nobody's talking about

We've spent decades building APIs that developers love but business users can't touch. Then we built dashboards and BI tools to bridge that gap, but they're still fundamentally limited by what engineers pre-configured six months ago. As much as I love my dc.js interactive dashboards, there is only so much data and only so many dimensions that we can pre-load.

MCP flips this. The AI becomes the interface layer between human intent and technical implementation. Your architecture stays distributed. Your services stay independent. But now anyone who can articulate what they need can potentially get it done.

Here's what most MCP discussions miss: conversational ops only works at scale with the right governance model.

You can't just give everyone access to everything and hope AI figures out the permissions. But you also can't treat IT like a gatekeeper in a world that moves at 1,000 tokens per second.

Start permissive—give access, monitor after the fact. Dean Stoecker built a multi-billion dollar category at Alteryx by turning IT into 'Air Traffic Controllers' rather than gatekeepers. The goal isn't just to see what happened, but why. Kafka gives you the data stream; MCP gives you the Intent Stream—the prompt that led to the action.

Don't repeat the 'Governance Theater' of the 2010s. For enterprise security teams, look at the 'Break-Glass' model used in healthcare: it's better to have a permissive system with a perfect audit trail than a restrictive system that forces your best people into 'Shadow AI' blind spots. Usage creates the roadmap for governance, not the other way around.
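What a "perfect audit trail" with an intent stream might look like, as a rough sketch: every tool call is stored together with the prompt that triggered it, and break-glass actions are flagged for review after the fact. The `record_intent` function, its field names, and the in-memory `AUDIT_LOG` are hypothetical; a real deployment would write to an append-only store.

```python
import json
import time
import uuid

AUDIT_LOG = []  # stand-in for an append-only store (a file, a Kafka topic, ...)


def record_intent(user: str, prompt: str, tool: str, arguments: dict,
                  break_glass: bool = False) -> dict:
    """Append one audit entry linking the prompt to the resulting tool call."""
    entry = {
        "id": str(uuid.uuid4()),
        "ts": time.time(),
        "user": user,
        "prompt": prompt,            # the "why"
        "tool": tool,                # the "what"
        "arguments": arguments,
        "break_glass": break_glass,  # elevated access, flagged for later review
    }
    AUDIT_LOG.append(json.dumps(entry))  # store a serialized copy of the entry
    return entry


record_intent("ops-manager", "Traffic is spiking early, add capacity",
              "scale_service", {"service": "checkout", "replicas": 12},
              break_glass=True)

# After-the-fact review: pull every elevated action along with its prompt.
for line in AUDIT_LOG:
    e = json.loads(line)
    if e["break_glass"]:
        print(e["user"], "->", e["tool"], "because:", e["prompt"])
```

The design choice worth noting is that the prompt travels with the action. A conventional access log tells you that `scale_service` ran; this one also tells you what the person was trying to accomplish.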

Service Size Doesn't Matter

We had Hadoop before Matei created Spark and roped in my other Berkeley classmates.

We had message queues and integration spaghetti before LinkedIn created Kafka.

We had database append-only logs before we had immutable blockchain.

MCP today feels like Hadoop in 2008. It works. It's useful. It solves real problems. But we're still writing too much boilerplate, thinking too hard about the plumbing.

Matei and the team saw Hadoop and asked "what if we made this actually pleasant to use?" That question gave us Spark—the same distributed compute idea with a 100x better abstraction—and speed.

Someone's going to look at MCP and ask the same thing. They'll build the abstraction layer that makes human-AI orchestration feel as natural as Kafka made event streaming feel.

If this plays out the way I expect, here's the shape of it:

- Intent streams: Conversations as first-class event logs that downstream systems can subscribe to, replay, and learn from—the Kafka topics of human intent

- Domain toolkits: Pre-built MCP orchestration patterns for FinOps, DevOps, SecOps that compose primitives into reusable playbooks

- Meta-protocols: Higher-level frameworks that sit on top of MCP the way Spark sits on top of Hadoop—making the 80% use cases trivial while keeping the escape hatches for the 20%

MCP is establishing the primitives. My bet is that the next wave builds the elegant abstractions on top.

What's Happened Since (2025 Update)

I started tinkering with MCP's potential in late 2024. By early 2026, we're seeing the maturity inflection I predicted starting to materialize.

What felt like Hadoop in 2008 is already approaching Spark-level polish:

- OpenAI added MCP support, validating it as a cross-provider standard

- Microsoft integrated MCP into Windows 11 for desktop AI orchestration

- Enterprise adoption accelerated as the protocol proved itself in production

- Code execution enhancements dramatically improved token efficiency

- In December 2025, Anthropic donated MCP to the Linux Foundation's Agentic AI Foundation, co-founded with OpenAI and Block—a clear signal that this is becoming industry infrastructure, not a single-vendor protocol

The pattern is playing out as expected: start with a crude but directionally correct protocol, watch early adopters validate the model, then see rapid standardization as the value becomes undeniable.

If you dismissed MCP as "too early" six months ago, you risk falling behind faster than you expect. The companies that moved early are now building competitive advantages on top of this foundation while others are still debating whether to start.

2026 Early Signals: Security and Scale

As MCP moves from early adopter experiments to production deployments, we're seeing the growing pains you'd expect from any infrastructure-grade protocol.

Security is getting real attention. In January 2026, CVE-2026-0621 exposed a ReDoS (Regular Expression Denial of Service) vulnerability in the MCP TypeScript SDK. This wasn't catastrophic, but it's a reminder: as MCP becomes the control plane for business operations, security hardening becomes critical. The governance model I discussed earlier—start permissive, monitor closely, then tighten based on real patterns—needs robust audit trails and vulnerability management from day one.

The ecosystem is exploding. The MCP SDK has surpassed 97 million downloads. There are now 75+ connectors spanning everything from databases to cloud services to internal tools. This is the "Cambrian explosion" moment—lots of experimentation, some winners emerging, standards starting to solidify.

Multi-vendor support is accelerating. Google and AWS are both investing in MCP compatibility. This matters enormously for SMBs and mid-market companies that can't afford vendor lock-in. When your AI orchestration layer works across Claude, GPT, Gemini, and whatever comes next, you're building on stable ground instead of betting on a single provider.

The open-source evolution through the Linux Foundation is reducing silos and enabling broader innovation. MCP is becoming what Kubernetes became for container orchestration—the layer where everyone agrees to interoperate, which frees them to compete on what's built on top.

Don't Get Caught in the Swing

The pendulum will keep swinging. The discourse will keep overshooting in both directions. That's how large technical communities work—the middle doesn't get conference talks or viral tweets.

But as technical leaders, our job isn't to ride the pendulum. It's to recognize patterns, separate signal from noise, and make pragmatic decisions.

MCP isn't going to force you back to monoliths. It's not going to save your microservices architecture either. It's a communication protocol that happens to be really good at letting humans orchestrate complex systems through natural language.

Use it where it makes sense. Ignore the architectural holy wars. And pay attention to what gets built on top of these primitives.

That's where the real innovation happens.

What to Do Monday Morning

Stop reading. Start doing. Here's your playbook:

1. Find your shadow AI usage

Walk around. Ask your team: "What are you copy-pasting into ChatGPT or Claude right now?"

I did this with a startup last month. Their sales team was screenshotting Salesforce data, pasting it into Claude, asking for forecasts, then manually typing the results back into their CRM. Every. Single. Day.

That's your first MCP server. Build the thing they're already hacking together manually.

2. The three-sentence test

Pick your most valuable internal tool. The one that only three people really understand. The one that causes bottlenecks when those three people are on vacation.

Now explain it to a new hire in three sentences. Can't do it? That tool is too complex to MCP-enable safely. Fix the tool first, or accept that it stays gated.

Can do it? Those three sentences are your MCP tool definitions. Write them down. That's your spec.
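Here is one way the three sentences could turn into a spec. An MCP tool definition carries a `name`, a `description`, and an `inputSchema` written as JSON Schema; everything else in this sketch (the forecast tool, its sentences, its parameters) is a hypothetical example, not a recommendation for your system.

```python
import json

# The three sentences you would tell a new hire (hypothetical example tool).
THREE_SENTENCES = [
    "Looks up the current sales forecast for one region.",
    "Takes a region code and a quarter.",
    "Returns a single forecast figure in USD.",
]

# One way to map them onto an MCP-style tool definition.
tool_definition = {
    "name": "get_sales_forecast",  # hypothetical name
    "description": " ".join(THREE_SENTENCES),
    "inputSchema": {  # JSON Schema for the arguments
        "type": "object",
        "properties": {
            "region": {"type": "string", "description": "Region code, e.g. EMEA"},
            "quarter": {"type": "string", "description": "Quarter, e.g. 2026-Q1"},
        },
        "required": ["region", "quarter"],
    },
}

print(json.dumps(tool_definition, indent=2))
```

If the second sentence needs a dozen parameters to be honest, that is the three-sentence test failing in a different form.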

3. Stop the service size debate

I've sat through hundreds of meetings about whether to split Service X into Services Y and Z. You know how many of those meetings mattered? Zero.

Here's what matters: Can your services describe their capabilities in a machine-readable format? If not, fix that. The size discussion is a distraction.

4. Log everything your AI touches

Start today. Every natural language query hitting your AI prototypes. Every tool invocation. Every failure.

This is gold. This is your roadmap. This is what people actually need versus what you think they need. I've watched teams waste months building features nobody asked for because they never bothered to check the logs.
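Reading the logs does not need tooling to start. A rough sketch, assuming each log entry records the query, the tool that was invoked (if any), and whether it succeeded; the entries and tool names below are invented sample data.

```python
from collections import Counter

# Hypothetical log entries: (natural-language query, tool invoked, succeeded?)
LOG = [
    ("forecast for EMEA next quarter", "get_sales_forecast", True),
    ("open tickets for Acme", "search_tickets", False),
    ("forecast for APAC", "get_sales_forecast", True),
    ("who owns the billing service", None, False),  # no tool existed for this
    ("open tickets for Globex", "search_tickets", False),
]

# Which tools people actually reach for.
usage = Counter(tool for _, tool, _ in LOG if tool)
# Which tools fail, and which requests had no tool at all.
failures = Counter(tool or "<no tool>" for _, tool, ok in LOG if not ok)

print("Most used:", usage.most_common())
print("Failing or missing:", failures.most_common())
```

The "no tool" bucket is the interesting one: those are requests people made that nothing could serve, which is about as direct a roadmap signal as you will get.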

5. Do the uncomfortable audit

Which of your managers exist primarily to move information from System A to Person B?

I'm not saying fire them. I'm saying retrain them. Invest in domain expertise. Invest in talent development. Because MCP is going to put pressure on that role, and you'd rather be ahead of it than surprised by it.

The pendulum will keep swinging. The discourse will keep overshooting.

But you don't have to ride it.

Recognize the pattern. Separate signal from noise. Make the pragmatic moves that position your organization for what's actually coming—not what the conference circuit is hyping this quarter.

Further reading: If this resonates, you might enjoy Martin Fowler's writing on microservices patterns and the Monolith First principle. For the broader pendulum pattern in tech, Gartner's hype cycle research documents this overshoot dynamic across technologies.

For MCP specifically: Anthropic's late-2025 announcements on the Linux Foundation donation and protocol enhancements detail the ecosystem evolution. The Awesome MCP Servers repo tracks the growing ecosystem of 75+ connectors. And for security practitioners, monitoring the CVE database for MCP-related vulnerabilities is essential as the protocol matures.

Published January 2025